Model choice

Below is a table of model providers that come pre-packaged with chatlas.

Each model provider has its own set of prerequisites. For example, OpenAI requires an API key, while Ollama requires you to install the Ollama CLI and download models. To see the prerequisites for a given provider, visit the relevant usage page in the table below.

| Name | Usage | Enterprise? |
|---|---|---|
| Anthropic (Claude) | `ChatAnthropic()` | |
| AWS Bedrock | `ChatBedrockAnthropic()` | ✅ |
| OpenAI | `ChatOpenAI()` | |
| Azure OpenAI | `ChatAzureOpenAI()` | ✅ |
| Google (Gemini) | `ChatGoogle()` | |
| Google (Vertex) | `ChatVertex()` | ✅ |
| GitHub model marketplace | `ChatGithub()` | |
| Ollama (local models) | `ChatOllama()` | |
| Open Router | `ChatOpenRouter()` | |
| DeepSeek | `ChatDeepSeek()` | |
| Hugging Face | `ChatHuggingFace()` | |
| Databricks | `ChatDatabricks()` | ✅ |
| Snowflake Cortex | `ChatSnowflake()` | ✅ |
| Mistral | `ChatMistral()` | ✅ |
| Groq | `ChatGroq()` | |
| perplexity.ai | `ChatPerplexity()` | |
| Cloudflare | `ChatCloudflare()` | |
| Portkey | `ChatPortkey()` | ✅ |

To use chatlas with a provider not listed in the table above, you have two options:

- If the model provider is “OpenAI compatible” (i.e., it can be used with the `openai` Python SDK), use `ChatOpenAI()` with the appropriate `base_url` and `api_key`.
    - When providers say they are “OpenAI compatible”, they usually mean compatible with the Completions API. In this case, use `ChatOpenAICompletions()` instead of `ChatOpenAI()` (the latter uses the newer Responses API).
- If you’re motivated, implement a new provider by subclassing `Provider` and implementing the required methods.
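To make the Completions vs. Responses distinction concrete, here is a minimal sketch that only *builds* (never sends) the HTTP request each OpenAI API expects, using just the standard library. The base URL `https://llm.example.com/v1`, the API key, the model name, and the helper functions are hypothetical placeholders. A provider that calls itself “OpenAI compatible” usually accepts the first shape (`POST {base_url}/chat/completions` with a `messages` list), which is what `ChatOpenAICompletions()` targets; `ChatOpenAI()` targets the second (`POST {base_url}/responses` with an `input` field).

```python
import json
import urllib.request

# Hypothetical endpoint and key for an "OpenAI compatible" provider.
BASE_URL = "https://llm.example.com/v1"
API_KEY = "sk-placeholder"


def _post(path: str, body: dict) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request against BASE_URL."""
    return urllib.request.Request(
        f"{BASE_URL}{path}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {API_KEY}", "Content-Type": "application/json"},
        method="POST",
    )


def completions_request(model: str, prompt: str) -> urllib.request.Request:
    """Completions shape: a list of role/content messages."""
    return _post("/chat/completions", {"model": model, "messages": [{"role": "user", "content": prompt}]})


def responses_request(model: str, prompt: str) -> urllib.request.Request:
    """Responses shape: a single `input` field."""
    return _post("/responses", {"model": model, "input": prompt})


# Compare the two request shapes side by side.
for req in (completions_request("my-model", "Hi"), responses_request("my-model", "Hi")):
    print(req.get_method(), req.full_url, json.loads(req.data))
```

In practice you would not build these requests by hand; you pass `base_url` and `api_key` to the chatlas constructor and it handles the wire format.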

Some providers offer only limited support for features such as tool calling, structured data extraction, and images. In that case, the provider’s reference page should include a known limitations section describing them.

Model choice

In addition to choosing a model provider, you also need to choose a specific model from that provider. This is important because different models have different capabilities and performance characteristics. For example, some models are faster and cheaper, while others are more accurate and capable of handling more complex tasks.

If you’re using chatlas inside your organisation, you’ll be limited to what your org allows, which is likely to be one provided by a big cloud provider (e.g. ChatAzureOpenAI() and ChatBedrockAnthropic()). If you’re using chatlas for your own personal exploration, you have a lot more freedom so we have a few recommendations to help you get started:

- `ChatOpenAI()` or `ChatAnthropic()` are both good places to start. `ChatOpenAI()` defaults to GPT-4.1, but you can use `model = "gpt-4.1-nano"` for a cheaper, lower-quality model, or `model = "o3"` for more complex reasoning. `ChatAnthropic()` is similarly good; it defaults to Claude 4.5 Sonnet, which we have found to be particularly good at writing code.
- `ChatGoogle()` is a strong model with a generous free tier (with the downside that your data is used to improve the model), making it a great place to start if you don’t want to spend any money.
- `ChatOllama()`, which uses Ollama, allows you to run models on your own computer. The biggest models you can run locally aren’t as good as the state-of-the-art hosted models, but they also don’t share your data and are effectively free.

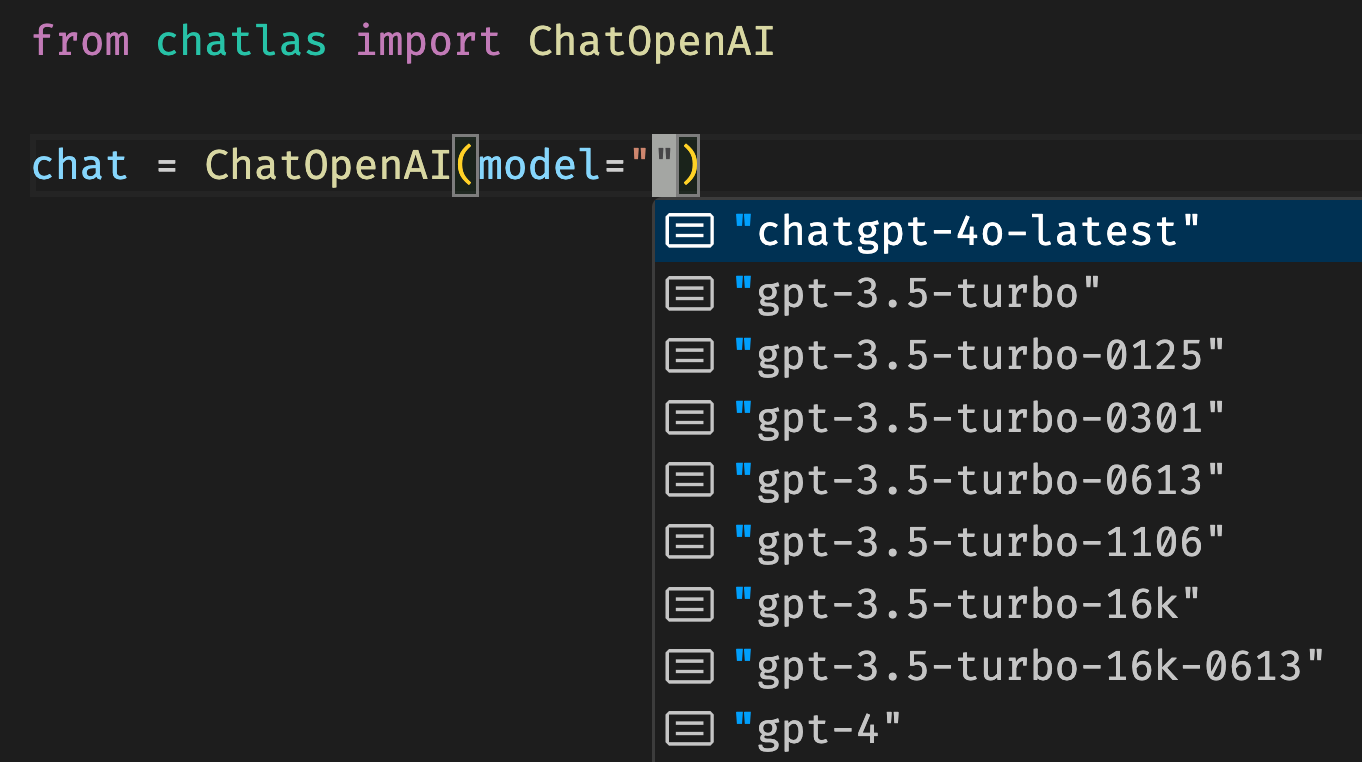

Model type hints

Some providers like ChatOpenAI() and ChatAnthropic() provide type hints for the model parameter. This makes it quick and easy to find the right model id – just enter model="" and you’ll get a list of available models to choose from (assuming your IDE supports type hints).

If the provider doesn’t provide these type hints, try using the .list_models() method (mentioned below) to find available models.
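Such hints are ordinary Python typing. The sketch below shows one common way to declare them, as an assumption rather than chatlas’s actual definition: a `Literal` of known model IDs combined with plain `str`, so an editor can suggest the listed IDs while any other string is still accepted. `ChatModel` and `make_chat` are hypothetical names, and the IDs are simply the ones mentioned earlier on this page.

```python
from typing import Literal, Union, get_args

# Hypothetical set of known model IDs; editors can offer these as completions.
ChatModel = Literal["gpt-4.1", "gpt-4.1-nano", "o3"]


def make_chat(model: Union[str, ChatModel] = "gpt-4.1") -> str:
    """Hypothetical constructor: the `str` arm keeps newer or unlisted IDs valid."""
    return model


print(get_args(ChatModel))        # the IDs an IDE can suggest
print(make_chat("o3"))            # one of the suggested IDs
print(make_chat("my-new-model"))  # an unlisted ID is still accepted at runtime
```

Including `str` in the union is the design choice that matters: without it, a type checker would flag any model released after the hints were written.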

Auto provider

ChatAuto() provides access to any provider/model combination through one simple string. This makes for a nice interactive/prototyping experience, where you can quickly switch between different models and providers, and leverage chatlas’ smart defaults:

```python
from chatlas import ChatAuto

# Default provider (OpenAI) & model
chat = ChatAuto()
print(chat.provider.name)
print(chat.provider.model)

# Different provider (Anthropic) & default model
chat = ChatAuto("anthropic")

# Choose specific provider/model (Claude Sonnet 4)
chat = ChatAuto("anthropic/claude-sonnet-4-0")
```

Listing model info

Most providers support the .list_models() method, which returns detailed information about all available models, including model IDs, pricing, and metadata. This is particularly useful for:

- Discovering what models are available (ordered by most recent).

- Comparing model pricing and characteristics.

- Finding exactly the right model ID to pass to the `Chat` constructor.

```python
from chatlas import ChatOpenAI
import pandas as pd

chat = ChatOpenAI()
models = chat.list_models()
pd.DataFrame(models)
```

```
                        id         owned_by  input  output  cached_input  created_at
0               gpt-5-nano           system   0.05     0.4         0.005  2025-08-05
1                    gpt-5           system   1.25    10.0         0.125  2025-08-05
2    gpt-5-mini-2025-08-07           system   0.25     2.0         0.025  2025-08-05
3               gpt-5-mini           system   0.25     2.0         0.025  2025-08-05
4    gpt-5-nano-2025-08-07           system   0.05     0.4         0.005  2025-08-05
..                     ...              ...    ...     ...           ...         ...
83       gpt-3.5-turbo-16k  openai-internal   3.00     4.0           NaN  2023-05-10
84                   tts-1  openai-internal    NaN     NaN           NaN  2023-04-19
85           gpt-3.5-turbo           openai   1.50     2.0           NaN  2023-02-28
86               whisper-1  openai-internal    NaN     NaN           NaN  2023-02-27
87  text-embedding-ada-002  openai-internal   0.10     0.0           NaN  2022-12-16
```

Different providers may include different metadata fields in the model information, but they all generally include the following key details:

- `id`: Model identifier to use with the `Chat` constructor
- `input`/`output`/`cached_input`: Token pricing in USD per million tokens
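Because prices are quoted per million tokens, estimating a request’s cost is simple arithmetic: tokens / 1,000,000 × price, summed over input and output. The sketch below applies this to a few rows copied from the listing above. It assumes each entry is a plain dict with the `id`, `input`, `output`, and `cached_input` keys seen in the DataFrame columns, and that cached input tokens are billed at the `cached_input` rate instead of the `input` rate. The token counts are made up, and real prices change over time, so use `.list_models()` for current figures.

```python
import math

# Rows copied from the list_models() output above (USD per million tokens).
models = [
    {"id": "gpt-5-nano", "input": 0.05, "output": 0.4, "cached_input": 0.005},
    {"id": "gpt-5", "input": 1.25, "output": 10.0, "cached_input": 0.125},
    {"id": "gpt-5-mini", "input": 0.25, "output": 2.0, "cached_input": 0.025},
    {"id": "tts-1", "input": float("nan"), "output": float("nan"), "cached_input": float("nan")},
]

PER_MILLION = 1_000_000


def estimate_cost(model: dict, input_tokens: int, output_tokens: int, cached_tokens: int = 0) -> float:
    """Estimated USD cost; `cached_tokens` is the part of `input_tokens` served from cache."""
    cost = (input_tokens - cached_tokens) / PER_MILLION * model["input"]
    cost += output_tokens / PER_MILLION * model["output"]
    if cached_tokens:  # skip the cached rate when nothing was cached (it may be NaN)
        cost += cached_tokens / PER_MILLION * model["cached_input"]
    return cost


# Drop models without token pricing, then rank by cost for 10k input / 2k output tokens.
priced = [m for m in models if not math.isnan(m["input"])]
for m in sorted(priced, key=lambda m: estimate_cost(m, 10_000, 2_000)):
    print(f"{m['id']:<12} ${estimate_cost(m, 10_000, 2_000):.4f}")
```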