Introduction

Explore data using natural language queries

querychat facilitates safe and reliable natural language exploration of tabular data, powered by SQL and large language models (LLMs). For users, it offers an intuitive web application where they can quickly ask questions of their data and receive verifiable data-driven answers. As a developer, you can access the chat UI component, generated SQL queries, and filtered data to build custom applications that integrate natural language querying into your data workflows.

Installation

Install the latest stable release from PyPI:

```bash
pip install querychat
```

Quick start

The main entry point is the QueryChat class. It requires a data source (e.g., a pandas or polars DataFrame) and a name for the data. It also accepts optional parameters to customize its behavior, such as which model to use. The quickest way to start chatting is to call the .app() method, which by default returns a Shiny app:

app.py

```python
from querychat import QueryChat
from querychat.data import titanic

qc = QueryChat(titanic(), "titanic")
app = qc.app()
```

querychat supports multiple web frameworks; just change the import.

With an API key set[1], save the code to app.py and run it:

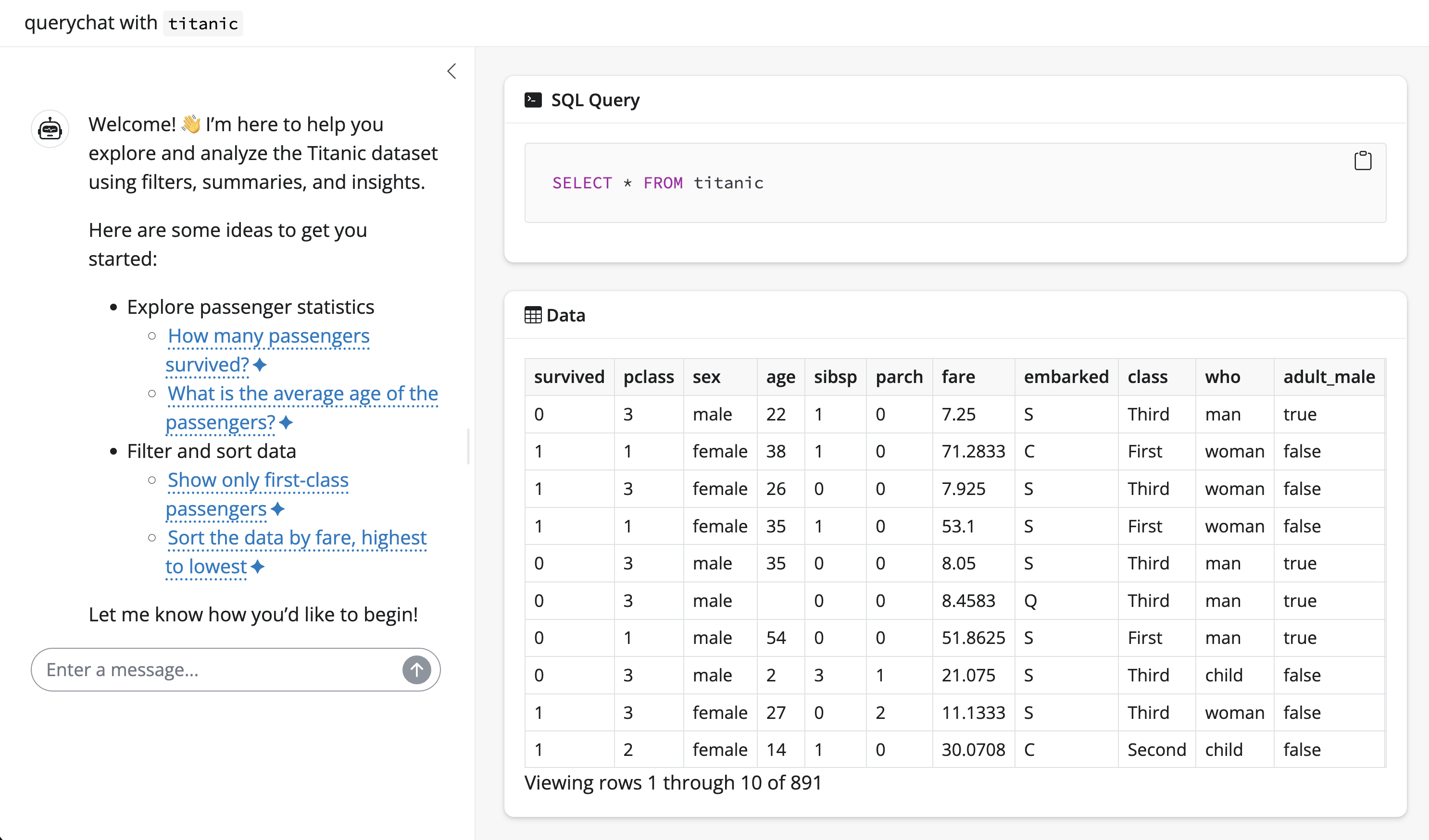

```bash
shiny run --reload app.py
```

Once running, you'll notice three main views:

- A sidebar chat with suggestions on where to start exploring.

- A data table that updates to reflect filtering and sorting queries.

- The SQL query behind the data table, for transparency and reproducibility.

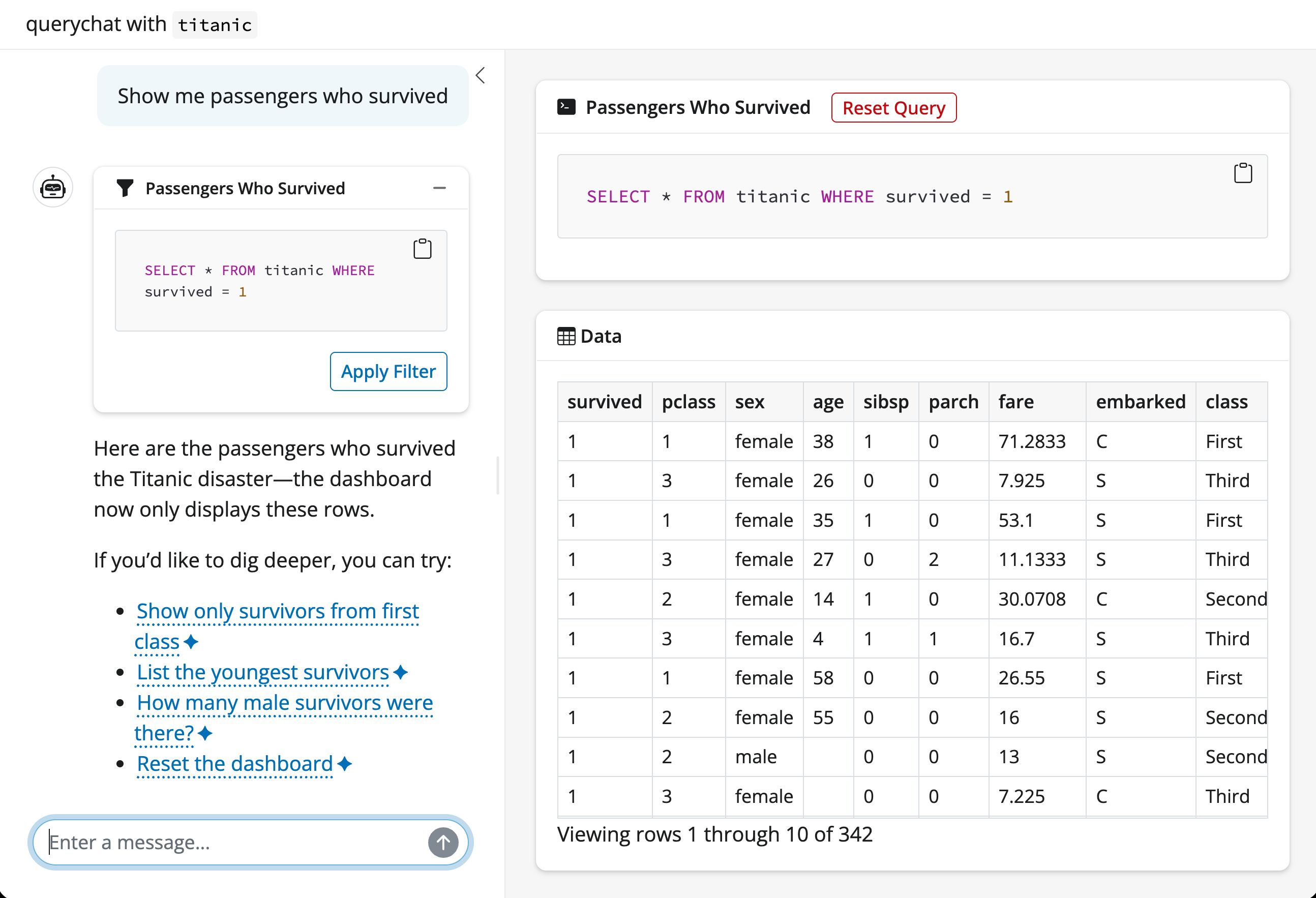

Suppose we pick a suggestion like “Show me passengers who survived”. Since this is a filtering operation, both the data table and SQL query update accordingly.

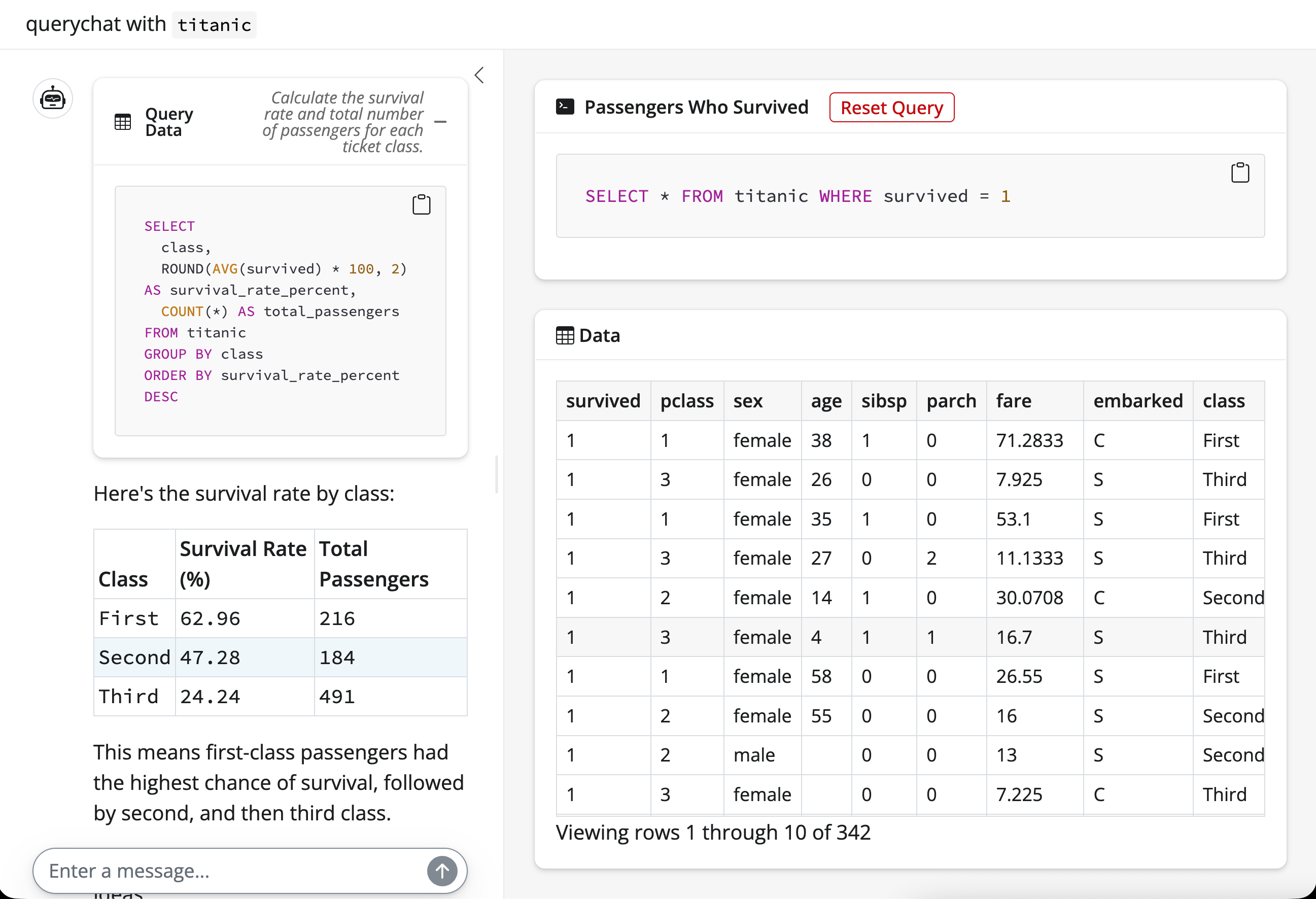
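For intuition, a filtering request like this one amounts to a `SELECT ... WHERE` query whose result becomes the new data table. The minimal sketch below uses Python's built-in `sqlite3` as a stand-in for DuckDB and a made-up five-row table; the SQL string is a hypothetical example of what a model might generate, not querychat's actual output or internals.

```python
import sqlite3

# Made-up rows (passenger_id, survived, age); not the real titanic dataset.
rows = [(1, 0, 22.0), (2, 1, 38.0), (3, 1, 26.0), (4, 1, None), (5, 0, 35.0)]

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE titanic (passenger_id INTEGER, survived INTEGER, age REAL)")
conn.executemany("INSERT INTO titanic VALUES (?, ?, ?)", rows)

# Hypothetical SQL a model might produce for "Show me passengers who survived".
filter_sql = "SELECT * FROM titanic WHERE survived = 1 ORDER BY passenger_id"

# The rows returned here are what the data table would display.
filtered = conn.execute(filter_sql).fetchall()
for row in filtered:
    print(row)
```

Because the table is just the result of a query, the same SQL string can be displayed next to it, which is what makes the view transparent and reproducible.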

querychat can also handle more general questions about the data that require calculations and aggregations. For example, we can ask “What is the average age of passengers who survived?” The LLM generates the SQL query to perform the calculation, and querychat executes it and returns the result in the chat.
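A minimal sketch of the aggregation case, again assuming `sqlite3` in place of DuckDB and made-up rows: the key point is that the database, not the model, computes the number. The SQL string is a hypothetical model output, not something querychat is guaranteed to produce.

```python
import sqlite3

# Made-up rows (passenger_id, survived, age); not the real titanic dataset.
rows = [(1, 0, 22.0), (2, 1, 38.0), (3, 1, 26.0), (4, 1, None), (5, 0, 35.0)]
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE titanic (passenger_id INTEGER, survived INTEGER, age REAL)")
conn.executemany("INSERT INTO titanic VALUES (?, ?, ?)", rows)

# Hypothetical SQL for "What is the average age of passengers who survived?"
agg_sql = "SELECT AVG(age) FROM titanic WHERE survived = 1"

# The database does the arithmetic; AVG ignores the NULL age of passenger 4.
(avg_age,) = conn.execute(agg_sql).fetchone()

# The query result (here, a single number) is what gets reported in the chat.
print(f"Average age of survivors: {avg_age:.1f}")
```

Note how the missing age is silently excluded by `AVG`; surfacing the SQL lets a reader check details like this rather than trusting a model-written summary.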

Web frameworks

While the examples above use Shiny, querychat also supports Streamlit, Gradio, and Dash. Each framework has its own QueryChat class under the relevant sub-module, but the methods and properties are mostly consistent across all of them.

Streamlit:

```python
from querychat.streamlit import QueryChat
from querychat.data import titanic

qc = QueryChat(titanic(), "titanic")
qc.app()
```

Gradio:

```python
from querychat.gradio import QueryChat
from querychat.data import titanic

qc = QueryChat(titanic(), "titanic")
qc.app().launch()
```

Dash:

```python
from querychat.dash import QueryChat
from querychat.data import titanic

qc = QueryChat(titanic(), "titanic")
qc.app().run()
```

Install the framework you need with optional dependencies:

```bash
pip install "querychat[streamlit]"  # or [gradio] or [dash]
```

Build custom apps

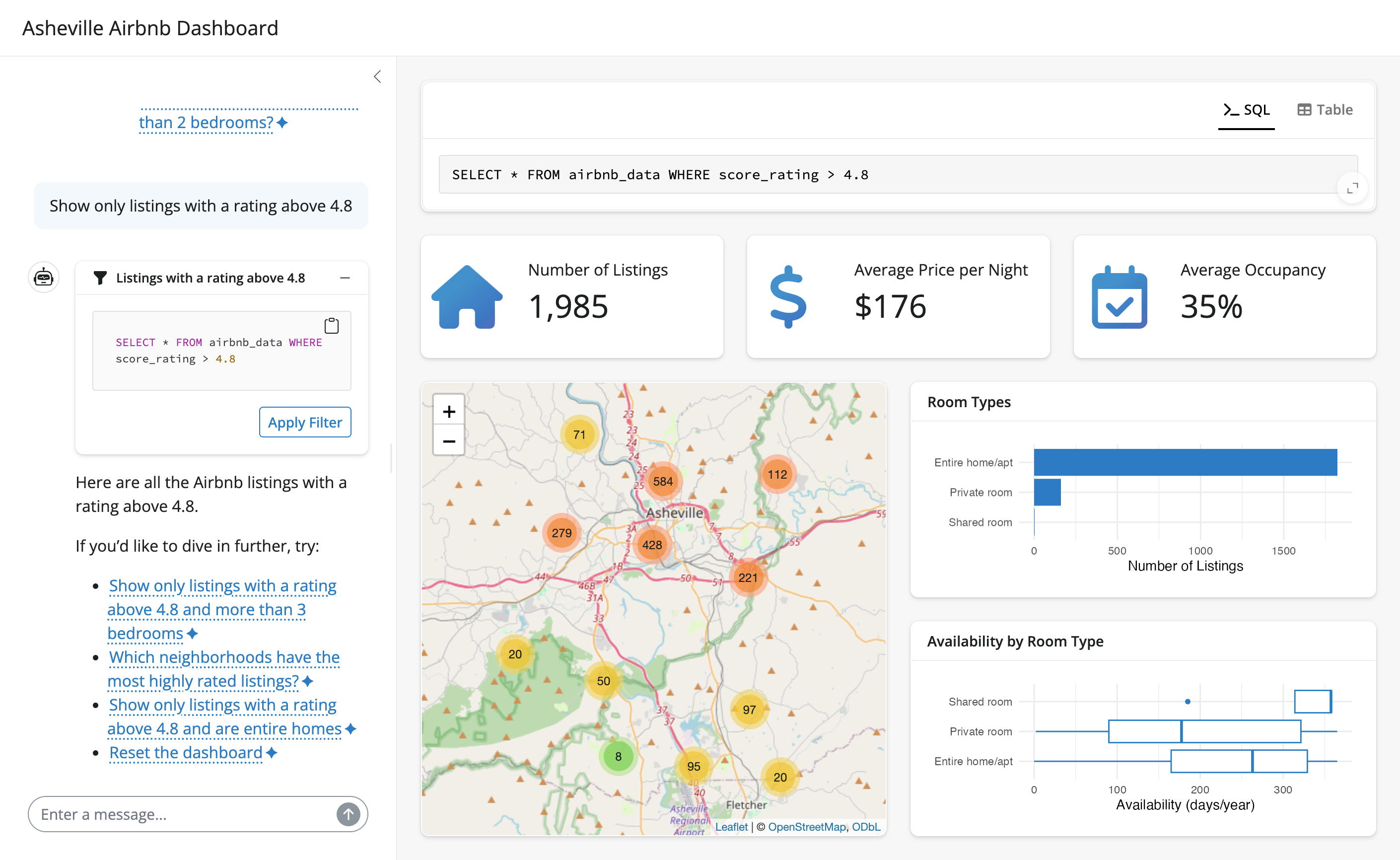

querychat is designed to be highly extensible – it provides programmatic access to the chat interface, the filtered/sorted data frame, SQL queries, and more. This makes it easy to build custom web apps that leverage natural language interaction with your data. For example, here’s a bespoke app for exploring Airbnb listings in Ashville, NC:

To learn more, see the build guides for your framework: Shiny, Streamlit, Gradio, or Dash.

How it works

querychat uses LLMs to translate natural language into SQL queries. Models of all sizes, from small ones you can run locally to large frontier models from major AI providers, are remarkably effective at this task. But even the best models need to understand your data’s overall structure to perform well.

To address this, querychat includes schema metadata – column names, types, ranges, categorical values – in the LLM’s system prompt. Importantly, querychat does not send raw data to the LLM; it shares only enough structural information for the model to generate accurate queries. When the LLM produces a query, querychat executes it in a SQL database (DuckDB[2], by default) to obtain precise results.
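To make "structural information, not raw data" concrete, here is a minimal sketch. The hypothetical `describe_schema` helper reduces each column to its type plus either a numeric range or a short list of categories, so no individual rows appear in the output. It illustrates the idea under our own assumptions; querychat's actual system prompt format and the exact statistics it includes may differ.

```python
from typing import Any


def describe_schema(table: str, columns: dict[str, list[Any]], max_categories: int = 10) -> str:
    """Hypothetical helper: summarize columns without including any raw rows."""
    lines = [f"Table: {table}"]
    for name, values in columns.items():
        present = [v for v in values if v is not None]  # ignore missing values
        is_numeric = present and all(
            isinstance(v, (int, float)) and not isinstance(v, bool) for v in present
        )
        if is_numeric:
            kind = "INTEGER" if all(isinstance(v, int) for v in present) else "FLOAT"
            lines.append(f"- {name} ({kind}): range {min(present)} to {max(present)}")
        else:
            categories = sorted({str(v) for v in present})
            if len(categories) <= max_categories:
                # Low-cardinality text: list the distinct values.
                lines.append(f"- {name} (TEXT): values {', '.join(categories)}")
            else:
                # High-cardinality text (e.g. names): report only the count.
                lines.append(f"- {name} (TEXT): {len(categories)} distinct values")
    return "\n".join(lines)


columns = {
    "age": [22.0, 38.0, None, 35.0],
    "sex": ["male", "female", "female", "male"],
    "survived": [0, 1, 1, 0],
}
summary = describe_schema("titanic", columns)
print(summary)
```

A summary like this is small and fixed in size regardless of how many rows the table has, which is part of why schema-only prompting scales to large datasets.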

This design makes querychat reliable, safe, and reproducible:

- Reliable: query results come from a real database, not LLM-generated summaries – so outputs are precise, verifiable, and less vulnerable to hallucination[3].

- Safe: querychat’s tools are read-only by design, avoiding destructive actions on your data.[4]

- Reproducible: generated SQL can be exported and re-run in other environments, so your analysis isn’t locked into a single tool.
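One way to back up "read-only by design" at the database level, as the footnote on database permissions recommends, is to use a connection that refuses writes. The sketch below assumes SQLite's `query_only` pragma as a stand-in (querychat defaults to DuckDB, whose own read-only options are not shown here); it illustrates the principle rather than how querychat itself enforces it.

```python
import sqlite3

# In-memory stand-in for the data source (illustrative rows only).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE titanic (passenger_id INTEGER, survived INTEGER)")
conn.executemany("INSERT INTO titanic VALUES (?, ?)", [(1, 0), (2, 1)])
conn.commit()

# From here on, SQLite rejects any statement that would modify the database.
conn.execute("PRAGMA query_only = ON")

# Reads still work as usual.
print(conn.execute("SELECT COUNT(*) FROM titanic").fetchall())  # [(2,)]

# A destructive query (e.g., from a bad model generation) is refused.
blocked = False
try:
    conn.execute("DELETE FROM titanic")
except sqlite3.OperationalError as err:
    blocked = True
    print(f"Blocked: {err}")

# The data is untouched.
print(conn.execute("SELECT COUNT(*) FROM titanic").fetchone()[0])  # 2
```

Enforcing this in the database rather than by inspecting SQL text means a cleverly phrased write statement cannot slip through a string check.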

Data privacy

See the Provide context and Tools articles for more details on exactly what information is provided to the LLM and how to customize it.

Next steps

From here, you might want to learn more about:

- Models: customize the LLM behind querychat.

- Data sources: different data sources you can use with querychat.

- Provide context: provide the LLM with the context it needs to work well.

- Build an app: Shiny | Streamlit | Gradio | Dash

Footnotes

[1] By default, querychat uses OpenAI to power the chat experience, so for this example to work, you’ll need an OpenAI API key. See the Models page for details on how to set up credentials for other model providers.

[2] DuckDB is extremely fast and has a surprising number of statistical functions.

[3] The query tool gives query results to the model for context and interpretation, so there is some potential for the model to misinterpret those results.

[4] To fully guarantee no destructive actions on your production database, ensure querychat’s database permissions are read-only.